Design of AI Applications to Counter Disinformation as a Strategic Threat Within the Field of Cybersecurity

Romilla Syed, associate professor in the College of Management, and Stéphane Gagnon, at the Université du Québec en Outaouais, along with a team of researchers, are investigating the use of artificial intelligence (AI) to counter disinformation as a strategic threat within the field of cybersecurity.

Our team is focused on a very specific type of disinformation: false allegations against politicians in their governance roles. The project is supported by grants from the Human-Centric Cybersecurity Partnership (HC2P) (CAN$25,000) and Fonds de recherche du Québec (CAN$175,000).

Disinformation, whether in text, video, or image form, can target public sector projects with the goal of tarnishing an otherwise well-functioning government initiative. These threats include false claims of conflicts of interest, nepotism, bribery, and corruption. The claims rely on assumptions or fabricated facts, both of which are very difficult to verify beyond a reasonable doubt. Mismanagement of this disinformation can damage democracy for several years, with the risk of an irrecoverable loss of trust in our institutions and leaders. It could cause projects or programs to be canceled, thereby depriving citizens of services and impeding national development. It could also distort decision-making processes, especially if the false claims are referenced during congressional and parliamentary hearings or other intervention committees. Thus, our team focuses on the design of a formal model to monitor and detect disinformation and to guide decision-making in the realm of political disinformation.

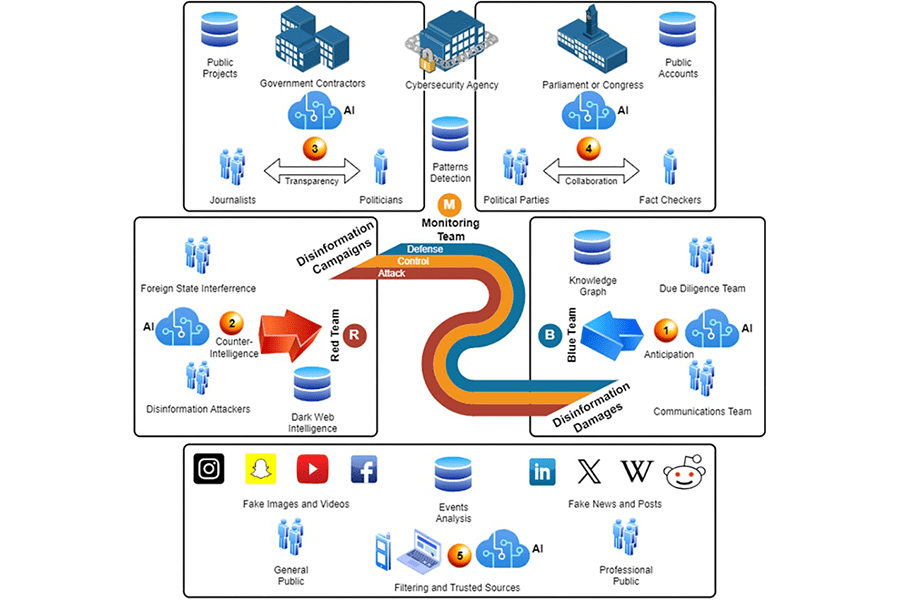

The diagram shows the wide scope of actors, information, and technologies involved. We identify five areas: (1) blue teams fighting the damage caused by disinformation; (2) red teams running disinformation campaigns; (3) the government projects targeted; (4) the parliaments where the attacks have an impact; (5) the public to whom disinformation is distributed. Cybersecurity agencies and monitoring teams are “in the middle.” The team is taking a “whole campaign” perspective on disinformation monitoring to provide at least five major functionalities: (1) anticipation of “next events” in a chain of fake news, to help blue teams fight more strategically; (2) counterintelligence about dark web actors, to deter and disarm them; (3) transparency enhancement, by linking public project information with politicians’ actions and formal news; (4) collaboration between political parties and their network of fact-checkers, to ensure that no false information is propagated in parliaments or used for decision-making; (5) filtering and trusted-source systems to support end users, whether members of the general public or professionals.

As of 2024, our team is collecting case study data on several corruption cases, some where the allegations proved true and others where they proved false. The team is also developing new disinformation ontologies (formal, machine-readable conceptual models) and knowledge graphs (KGs; vast networks of logical relationships, including decision rules) to help model disinformation campaigns and attack patterns, which will then be integrated into graph-augmented large language models (LLMs). Finally, a set of use cases is being developed in collaboration with political communications experts, who will help identify how to use AI, especially KGs and LLMs, to overcome the impact of disinformation in their work and to ensure faster and more accurate due diligence when resolving corruption allegations against politicians.
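
To give a rough sense of what a knowledge graph with a decision rule can look like, here is a minimal Python sketch. It is an illustration only, not the team's ontology or data: the entities (`claim_1`, `politician_A`, `project_X`), the relation names, and the `needs_review` rule are all hypothetical and were chosen purely for the example.

```python
# Hypothetical (subject, relation, object) triples; none come from the project's data.
TRIPLES = [
    ("claim_1", "alleges", "conflict_of_interest"),
    ("claim_1", "targets", "politician_A"),
    ("claim_1", "concerns", "project_X"),
    ("claim_1", "published_by", "anonymous_blog"),
    ("claim_2", "alleges", "bribery"),
    ("claim_2", "targets", "politician_A"),
    ("claim_2", "concerns", "project_X"),
    ("claim_2", "supported_by", "audit_report_7"),
]


def build_graph(triples):
    """Index the triples as subject -> relation -> set of objects."""
    graph = {}
    for subject, relation, obj in triples:
        graph.setdefault(subject, {}).setdefault(relation, set()).add(obj)
    return graph


def needs_review(graph, claim):
    """Illustrative decision rule: flag a claim that has no supporting evidence."""
    return not graph.get(claim, {}).get("supported_by")


def claims_about(graph, project):
    """List every claim linked to a given project, in sorted order."""
    return sorted(
        subject
        for subject, relations in graph.items()
        if project in relations.get("concerns", set())
    )


if __name__ == "__main__":
    graph = build_graph(TRIPLES)
    for claim in claims_about(graph, "project_X"):
        status = "needs review" if needs_review(graph, claim) else "has evidence"
        print(claim, status)
```

A real ontology would define these entity and relation types formally, and a graph-augmented LLM would query a far larger graph; the sketch only shows how linking claims to projects, people, and evidence makes simple rules checkable by a machine.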

Associate Professor of Information Systems